____________________________

A Radically Modern Approach to Introductory Physics

Second Edition

Volume 1: Fundamental Principles

David J. Raymond

The New Mexico Tech Press

Socorro, New Mexico, USA

A Radically Modern Approach to Introductory Physics Second Edition

Volume 1: Fundamental Principles

David J. Raymond

Copyright © 2011, 2016 David J. Raymond

Second Edition

Original state, 17 May 2016

Content of this book available under the Creative Commons Attribution-Noncommercial-Share-Alike License. See http://creativecommons.org/licenses/by-nc-sa/3.0/ for details.

Publisher’s Cataloguing-in-Publication Data

OCLC Number: 952471268 (print) – 952469817 (ebook)

Published by The New Mexico Tech Press, a New Mexico nonprofit corporation

This copy printed by CreateSpace, Charleston, SC

The New Mexico Tech Press

Socorro, New Mexico, USA

http://press.nmt.edu

To my wife Georgia and my daughters Maria and Elizabeth.

The idea for a “radically modern” introductory physics course arose out of frustration in the physics department at New Mexico Tech with the standard two-semester treatment of the subject. It is basically impossible to incorporate a significant amount of “modern physics” (meaning post-19th century!) in that format. It seemed to us that largely skipping the “interesting stuff” that has transpired since the days of Einstein and Bohr was like teaching biology without any reference to DNA. We felt at the time (and still feel) that an introductory physics course for non-majors should make an attempt to cover the great accomplishments of physics in the 20th century, since they form such an important part of our scientific culture.

It would, of course, be easy to pander to students – teach them superficially about the things they find interesting, while skipping the “hard stuff”. However, I am convinced that they would ultimately find such an approach as unsatisfying as would the educated physicist. What was needed was a unifying vision which allowed the presentation of all of physics from a modern point of view.

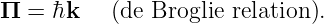

The idea for this course came from reading Louis de Broglie’s Nobel Prize address.1 De Broglie’s work is a masterpiece based on the principles of optics and special relativity, which qualitatively foresees the path taken by Schrödinger and others in the development of quantum mechanics. It thus dawned on me that perhaps optics and waves together with relativity could form a better foundation for all of physics, providing a more interesting way into the subject than classical mechanics.

Whether this is so or not is still a matter of debate, but it is indisputable that such a path is much more fascinating to most college students interested in pursuing studies in physics — especially those who have been through the usual high school treatment of classical mechanics. I am also convinced that the development of physics in these terms, though not historical, is at least as rigorous and coherent as the classical approach.

After 15 years of gradual development, it is clear that the course failed in its original purpose, as a replacement for the standard, one-year introductory physics course with calculus. The material is way too challenging, given the level of interest of the typical non-physics student. However, the course has found a niche at the sophomore level for physics majors (and occasional non-majors with a special interest in physics) to explore some of the ideas that drew them to physics in the first place. It was placed at the sophomore level because we found that having some background in both calculus and introductory college-level physics is advantageous for most students. However, we allow incoming freshmen into the course if they have an appropriate high school background in physics and math.

The course is tightly structured, and it contains little or nothing that can be omitted. However, it is designed to fit into two semesters or three quarters. In broad outline form, the structure is as follows:

A few words about how I have taught the course at New Mexico Tech are in order. As with our standard course, each week contains three lecture hours and a two-hour recitation. The recitation is the key to making the course accessible to the students. I generally have small groups of students working on assigned homework problems during recitation while I wander around giving hints. After all groups have completed their work, a representative from each group explains their problem to the class. The students are then required to write up the problems on their own and hand them in at a later date. The problems are the key to student learning, and associating course credit with the successful solution of these problems insures virtually 100% attendance in recitation.

In addition, chapter reading summaries are required, with the students urged to ask questions about material in the text that gave them difficulties. Significant lecture time is taken up answering these questions. Students tend to do the summaries, as they also count for their grade. The summaries and the questions posed by the students have been quite helpful to me in indicating parts of the text which need clarification.

The writing style of the text is quite terse. This partially reflects its origin in a set of lecture notes, but it also focuses the students’ attention on what is really important. Given this structure, a knowledgeable instructor able to offer one-on-one time with students (as in our recitation sections) is essential for student success. The text is most likely to be useful in a sophomore-level course introducing physics majors to the broad world of physics viewed from a modern perspective.

I freely acknowledge stealing ideas from Edwin Taylor, John Archibald Wheeler, Thomas Moore, Robert Mills, Bruce Sherwood, and many other creative physicists, and I owe a great debt to them. The physics department at New Mexico Tech has been quite supportive of my efforts over the years relative to this course, for which I am exceedingly grateful. Finally, my humble thanks go out to the students who have enthusiastically (or on occasion unenthusiastically) responded to this course. It is much, much better as a result of their input.

My colleagues Alan Blyth, David Westpfahl, Ken Eack, and Sharon Sessions were brave enough to teach this course at various stages of its development, and I welcome the feedback I have received from them. Their experience shows that even seasoned physics teachers require time and effort to come to grips with the content of this textbook!

The reviews of Allan Stavely and Paul Arendt in conjunction with the publication of this book by the New Mexico Tech Press have been enormously helpful, and I am very thankful for their support and enthusiasm. Penny Bencomo and Keegan Livoti taught me a lot about written English with their copy editing.

David J. Raymond

Aside from numerous corrections, clarifications, and minor enhancements, the main additions to this edition include the following:

As in the first edition, I am greatful for the reviews of Paul Arendt and Allan Stavely, who always manage to catch things that I have overlooked.

David J. Raymond

Physics Department

New Mexico Tech

Socorro, NM, USA

djraymondnm@gmail.com

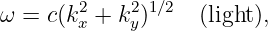

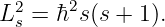

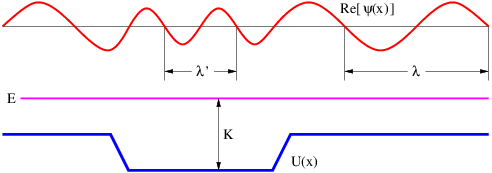

The wave is a universal phenomenon which occurs in a multitude of physical contexts. The purpose of this section is to describe the kinematics of waves, i. e., to provide tools for describing the form and motion of all waves irrespective of their underlying physical mechanisms.

Many examples of waves are well known to you. You undoubtedly know about ocean waves and have probably played with a stretched slinky toy, producing undulations which move rapidly along the slinky. Other examples of waves are sound, vibrations in solids, and light.

In this chapter we learn first about the basic properties of waves and introduce a special type of wave called the sine wave. Examples of waves seen in the real world are presented. We then learn about the superposition principle, which allows us to construct complex wave patterns by superimposing sine waves. Using these ideas, we discuss the related ideas of beats and interferometry. Finally, the ideas of wave packets and group velocity are introduced.

____________________________

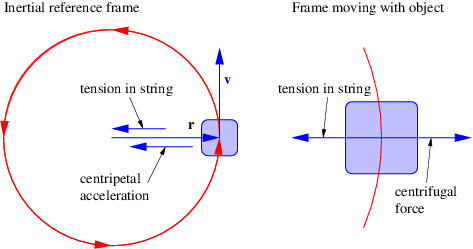

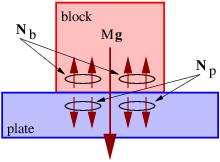

With the exception of light, waves are undulations in a material medium. For instance, ocean waves are (nearly) vertical undulations in the position of water parcels. The oscillations in neighboring parcels are phased such that a pattern moves across the ocean surface. Waves on a slinky are either transverse, in that the motion of the material of the slinky is perpendicular to the orientation of the slinky, or they are longitudinal, with material motion in the direction of the stretched slinky. (See figure 1.1.) Some media support only longitudinal waves, others support only transverse waves, while yet others support both types. Light waves are purely transverse, while sound waves are purely longitudinal. Ocean waves are a peculiar mixture of transverse and longitudinal, with parcels of water moving in elliptical trajectories as waves pass.

Light is a form of electromagnetic radiation. The undulations in an electromagnetic wave occur in the electric and magnetic fields. These oscillations are perpendicular to the direction of motion of the wave (in a vacuum), which is why we call light a transverse wave.

____________________________

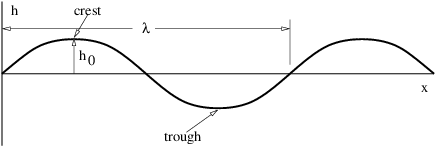

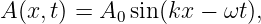

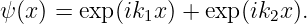

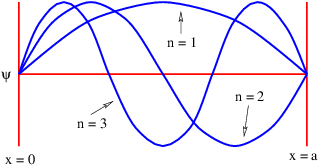

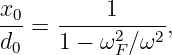

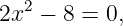

A particularly simple kind of wave, the sine wave, is illustrated in figure 1.2. This has the mathematical form

| (1.1) |

where h is the displacement (which can be either longitudinal or transverse), h0 is the maximum displacement, also called the amplitude of the wave, and λ is the wavelength. The oscillatory behavior of the wave is assumed to carry on to infinity in both positive and negative x directions. Notice that the wavelength is the distance through which the sine function completes one full cycle. The crest and the trough of a wave are the locations of the maximum and minimum displacements, as seen in figure 1.2.

So far we have only considered a sine wave as it appears at a particular time. All interesting waves move with time. The movement of a sine wave to the right a distance d may be accounted for by replacing x in the above formula by x - d. If this movement occurs in time t, then the wave moves at velocity c = d∕t. Solving this for d and substituting yields a formula for the displacement of a sine wave as a function of both distance x and time t:

![h(x,t) = h0sin[2π(x - ct)∕λ].](book11x.png) | (1.2) |

The time for a wave to move one wavelength is called the period of the wave: T = λ∕c. Thus, we can also write

![h(x,t) = h sin[2π(x∕ λ - t∕T )].

0](book12x.png) | (1.3) |

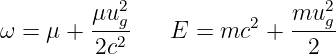

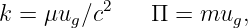

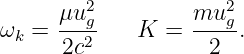

Physicists actually like to write the equation for a sine wave in a slightly simpler form. Defining the wavenumber as k = 2π∕λ and the angular frequency as ω = 2π∕T, we write

| (1.4) |

We normally think of the frequency of oscillatory motion as the number of cycles completed per second. This is called the rotational frequency, and is given by f = 1∕T. It is related to the angular frequency by ω = 2πf. The rotational frequency is usually easier to measure than the angular frequency, but the angular frequency tends to be used more often in theoretical discussions. As shown above, converting between the two is not difficult. Rotational frequency is measured in units of hertz, abbreviated Hz; 1 Hz = 1 cycle s-1. Angular frequency also has the dimensions of inverse time, e. g., radian s-1, but the term “hertz” is generally reserved only for rotational frequency.

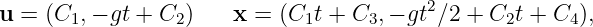

The argument of the sine function is by definition an angle. We refer to this angle as the phase of the wave, ϕ = kx - ωt. The difference in the phase of a wave at fixed time over a distance of one wavelength is 2π, as is the difference in phase at fixed position over a time interval of one wave period.

Since angles are dimensionless, we normally don’t include this in the units for frequency. However, it sometimes clarifies things to refer to the dimensions of rotational frequency as “rotations per second” or angular frequency as “radians per second”.

As previously noted, we call h0, the maximum displacement of the wave, the amplitude. Often we are interested in the intensity of a wave, which is proportional to the square of the amplitude. The intensity is often related to the amount of energy being carried by a wave.

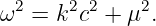

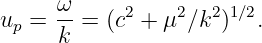

The wave speed we have defined above, c = λ∕T, is actually called the phase speed. Since λ = 2π∕k and T = 2π∕ω, we can write the phase speed in terms of the angular frequency and the wavenumber:

| (1.5) |

In order to make the above material more concrete, we now examine the characteristics of various types of waves which may be observed in the real world.

____________________________

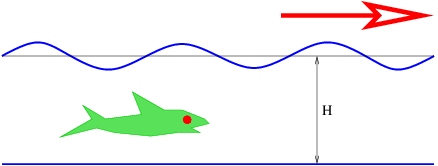

These waves are manifested as undulations of the ocean surface as seen in figure 1.3. The speed of ocean waves is given by the formula

| (1.6) |

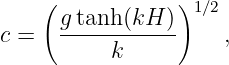

where g = 9.8 m s-2 is the earth’s gravitational force per unit mass, H is the depth of the ocean, and the hyperbolic tangent is defined as1

| (1.7) |

The equation for the speed of ocean waves comes from the theory for oscillations of a fluid surface in a gravitional field.

____________________________

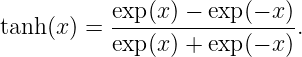

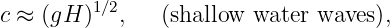

As figure 1.4 shows, for |x|≪ 1, we can approximate the hyperbolic tangent by tanh(x) ≈ x, while for |x|≫ 1 it is +1 for x > 0 and -1 for x < 0. This leads to two limits: Since x = kH, the shallow water limit, which occurs when kH ≪ 1, yields a wave speed of

| (1.8) |

while the deep water limit, which occurs when kH ≫ 1, yields

| (1.9) |

Notice that the speed of shallow water waves depends only on the depth of the water and on g. In other words, all shallow water waves move at the same speed. On the other hand, deep water waves of longer wavelength (and hence smaller wavenumber) move more rapidly than those with shorter wavelength. Waves for which the wave speed varies with wavelength are called dispersive. Thus, deep water waves are dispersive, while shallow water waves are non-dispersive.

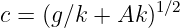

For water waves with wavelengths of a few centimeters or less, surface tension becomes important to the dynamics of the waves. In the deep water case, the wave speed at short wavelengths is given by the formula

| (1.10) |

where the constant A is related to an effect called surface tension. For an air-water interface near room temperature, A ≈ 74 cm3 s-2.

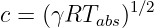

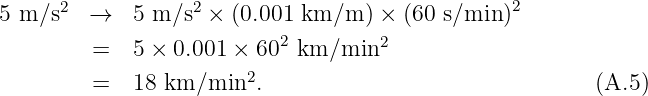

Sound is a longitudinal compression-expansion wave in a fluid. The wave speed for sound in an ideal gas is

| (1.11) |

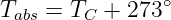

where γ and R are constants and Tabs is the absolute temperature. The absolute temperature is measured in Kelvins and is numerically given by

| (1.12) |

where TC is the temperature in Celsius degrees. The angular frequency of sound waves is thus given by

| (1.13) |

The speed of sound in air at normal temperatures is about 340 m s-1.

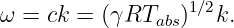

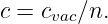

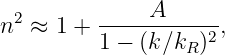

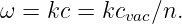

Light moves in a vacuum at a speed of cvac = 3 × 108 m s-1. In transparent materials it moves at a speed less than cvac by a factor n which is called the refractive index of the material:

| (1.14) |

Often the refractive index takes the form

| (1.15) |

where k is the wavenumber and kR and A are positive constants characteristic of the material. The angular frequency of light in a transparent medium is thus

| (1.16) |

It is found empirically that as long as the amplitudes of waves in most media are small, two waves in the same physical location don’t interact with each other. Thus, for example, two waves moving in the opposite direction simply pass through each other without their shapes or amplitudes being changed. When collocated, the total wave displacement is just the sum of the displacements of the individual waves. This is called the superposition principle. At sufficiently large amplitude the superposition principle often breaks down — interacting waves may scatter off of each other, lose amplitude, or change their form.

Interference is a consequence of the superposition principle. When two or more waves are superimposed, the net wave displacement is just the algebraic sum of the displacements of the individual waves. Since these displacements can be positive or negative, the net displacement can either be greater or less than the individual wave displacements. The former case, which occurs when both displacements are of the same sign, is called constructive interference, while destructive interference occurs when they are of opposite sign.

____________________________

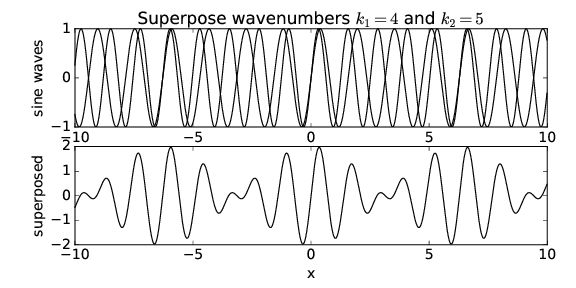

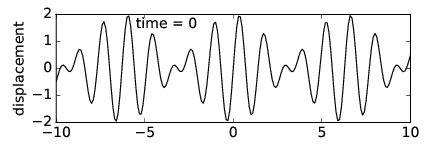

Let us see what happens when we superimpose two sine waves with different wavenumbers. Figure 1.5 shows the superposition of two waves with wavenumbers k1 = 4 and k2 = 5. Notice that the result is a wave with about the same wavelength as the two initial waves, but which varies in amplitude depending on whether the two sine waves are interfering constructively or destructively. We say that the waves are in phase if they are interfering constructively, and they are out of phase if they are interfering destructively.

____________________________

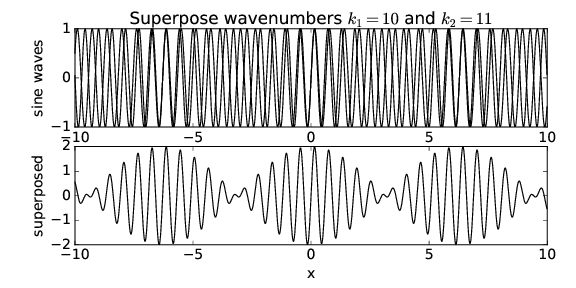

What happens when the wavenumbers of the two sine waves are changed? Figure 1.6 shows the result when k1 = 10 and k2 = 11. Notice that though the wavelength of the resultant wave is decreased, the locations where the amplitude is maximum have the same separation in x as in figure 1.5.

____________________________

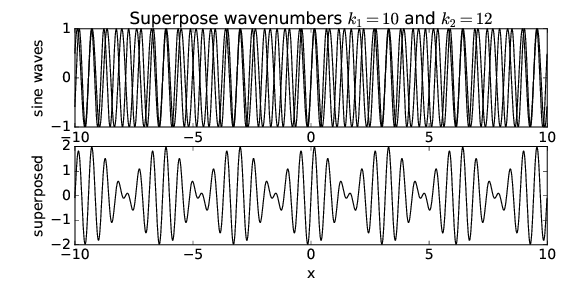

If we superimpose waves with k1 = 10 and k2 = 12, as is shown in figure 1.7, we see that the x spacing of the regions of maximum amplitude has decreased by a factor of two. Thus, while the wavenumber of the resultant wave seems to be related to something like the average of the wavenumbers of the component waves, the spacing between regions of maximum wave amplitude appears to go inversely with the difference of the wavenumbers of the component waves. In other words, if k1 and k2 are close together, the amplitude maxima are far apart and vice versa.

____________________________

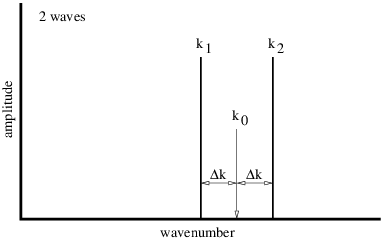

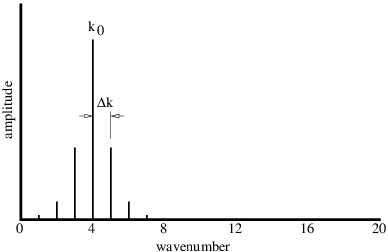

We can symbolically represent the sine waves that make up figures 1.5, 1.6, and 1.7 by a plot such as that shown in figure 1.8. The amplitudes and wavenumbers of each of the sine waves are indicated by vertical lines in this figure.

____________________________

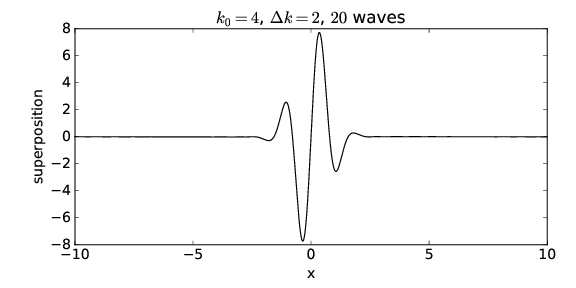

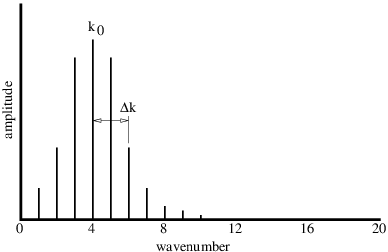

The regions of large wave amplitude are called wave packets. Wave packets will play a central role in what is to follow, so it is important that we acquire a good understanding of them. The wave packets produced by only two sine waves are not well separated along the x-axis. However, if we superimpose many waves, we can produce an isolated wave packet. For example, figure 1.9 shows the results of superimposing 20 sine waves with wavenumbers k = 0.4m, m = 1, 2,…, 20, where the amplitudes of the waves are largest for wavenumbers near k = 4. In particular, we assume that the amplitude of each sine wave is proportional to exp[-(k - k0)2∕Δk2], where k 0 = 4 defines the maximum of the distribution of wavenumbers and Δk = 1 defines the half-width of this distribution. The amplitudes of each of the sine waves making up the wave packet in figure 1.9 are shown schematically in figure 1.10.

____________________________

____________________________

The quantity Δk controls the distribution of the sine waves being superimposed — only those waves with a wavenumber k within approximately Δk of the central wavenumber k0 of the wave packet, i. e., for 3 ≤ k ≤ 5 in this case, contribute significantly to the sum. If Δk is changed to 2, so that wavenumbers in the range 2 ≤ k ≤ 6 contribute significantly, the wavepacket becomes narrower, as is shown in figures 1.11 and 1.12. Δk is called the wavenumber spread of the wave packet, and it evidently plays a role similar to the difference in wavenumbers in the superposition of two sine waves — the larger the wavenumber spread, the smaller the physical size of the wave packet. Furthermore, the wavenumber of the oscillations within the wave packet is given approximately by the central wavenumber.

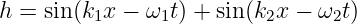

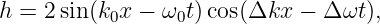

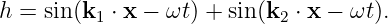

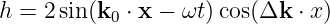

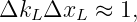

We can better understand how wave packets work by mathematically analyzing the simple case of the superposition of two sine waves. Let us define k0 = (k1 + k2)∕2 where k1 and k2 are the wavenumbers of the component waves. Furthermore let us set Δk = (k2 - k1)∕2. The quantities k0 and Δk are graphically illustrated in figure 1.8. We can write k1 = k0 - Δk and k2 = k0 + Δk and use the trigonometric identity sin(a + b) = sin(a) cos(b) + cos(a) sin(b) to find

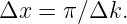

The sine factor on the bottom line of the above equation produces the oscillations within the wave packet, and as speculated earlier, this oscillation has a wavenumber k0 equal to the average of the wavenumbers of the component waves. The cosine factor modulates this wave with a spacing between regions of maximum amplitude of

| (1.18) |

Thus, as we observed in the earlier examples, the length of the wave packet Δx is inversely related to the spread of the wavenumbers Δk (which in this case is just the difference between the two wavenumbers) of the component waves. This relationship is central to the uncertainty principle of quantum mechanics.

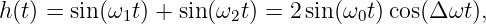

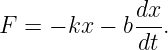

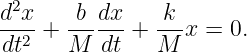

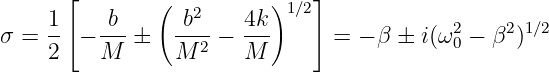

Suppose two sound waves of different frequency but equal amplitude impinge on your ear at the same time. The displacement perceived by your ear is the superposition of these two waves, with time dependence

| (1.19) |

where we have used the above math trick, and where ω0 = (ω1 + ω2)∕2 and Δω = (ω2 - ω1)∕2. What you actually hear is a tone with angular frequency ω0 which fades in and out with period

| (1.20) |

| (1.21) |

Note how beats are the time analog of wave packets — the mathematics are the same except that frequency replaces wavenumber and time replaces space.

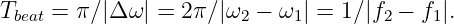

An interferometer is a device which splits a beam of light (or other wave) into two sub-beams, shifts the phase of one sub-beam with respect to the other, and then superimposes the sub-beams so that they interfere constructively or destructively, depending on the magnitude of the phase shift between them. In this section we study the Michelson interferometer and interferometric effects in thin films.

The American physicist Albert Michelson invented the optical interferometer illustrated in figure 1.13. The incoming beam is split into two beams by the half-silvered mirror. Each sub-beam reflects off of another mirror which returns it to the half-silvered mirror, where the two sub-beams recombine as shown. One of the reflecting mirrors is movable by a sensitive micrometer device, allowing the path length of the corresponding sub-beam, and hence the phase relationship between the two sub-beams, to be altered. As figure 1.13 shows, the difference in path length between the two sub-beams is 2x because the horizontal sub-beam traverses the path twice. Thus, constructive interference occurs when this path difference is an integral number of wavelengths, i. e.,

| (1.22) |

where λ is the wavelength of the wave and m is an integer. Note that m is the number of wavelengths that fits evenly into the distance 2x.

____________________________

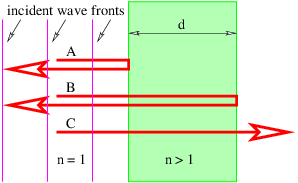

One of the most revealing examples of interference occurs when light interacts with a thin film of transparent material such as a soap bubble. Figure 1.14 shows how a plane wave normally incident on the film is partially reflected by the front and rear surfaces. The waves reflected off the front and rear surfaces of the film interfere with each other. The interference can be either constructive or destructive depending on the phase difference between the two reflected waves.

If the wavelength of the incoming wave is λ, one would naively expect constructive interference to occur between the A and B beams if 2d were an integral multiple of λ.

Two factors complicate this picture. First, the wavelength inside the film is not λ, but λ∕n, where n is the index of refraction of the film. Constructive interference would then occur if 2d = mλ∕n. Second, it turns out that an additional phase shift of half a wavelength occurs upon reflection when the wave is incident on material with a higher index of refraction than the medium in which the incident beam is immersed. This phase shift doesn’t occur when light is reflected from a region with lower index of refraction than felt by the incident beam. Thus beam B doesn’t acquire any additional phase shift upon reflection. As a consequence, constructive interference actually occurs when

| (1.23) |

while destructive interference results when

| (1.24) |

When we look at a soap bubble, we see bands of colors reflected back from a light source. What is the origin of these bands? Light from ordinary sources is generally a mixture of wavelengths ranging from roughly λ = 4.5 × 10-7 m (violet light) to λ = 6.5 × 10-7 m (red light). In between violet and red we also have blue, green, and yellow light, in that order. Because of the different wavelengths associated with different colors, it is clear that for a mixed light source we will have some colors interfering constructively while others interfere destructively. Those undergoing constructive interference will be visible in reflection, while those undergoing destructive interference will not.

Another factor enters as well. If the light is not normally incident on the film, the difference in the distances traveled between beams reflected off of the front and rear faces of the film will not be just twice the thickness of the film. To understand this case quantitatively, we need the concept of refraction, which will be developed later in the context of geometrical optics. However, it should be clear that different wavelengths will undergo constructive interference for different angles of incidence of the incoming light. Different portions of the thin film will in general be viewed at different angles, and will therefore exhibit different colors under reflection, resulting in the colorful patterns normally seen in soap bubbles.

____________________________

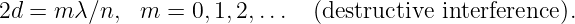

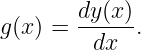

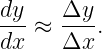

This section provides a quick review of the idea of the derivative. Often we are interested in the slope of a line tangent to a function y(x) at some value of x. This slope is called the derivative and is denoted dy∕dx. Since a tangent line to the function can be defined at any point x, the derivative itself is a function of x:

| (1.25) |

As figure 1.15 illustrates, the slope of the tangent line at some point on the function may be approximated by the slope of a line connecting two points, A and B, set a finite distance apart on the curve:

| (1.26) |

As B is moved closer to A, the approximation becomes better. In the limit when B moves infinitely close to A, it is exact.

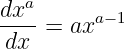

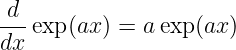

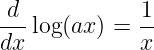

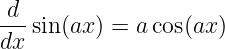

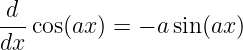

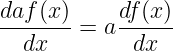

Derivatives of some common functions are now given. In each case a is a constant.

| (1.27) |

| (1.28) |

| (1.29) |

| (1.30) |

| (1.31) |

| (1.32) |

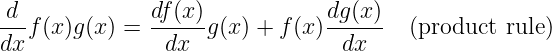

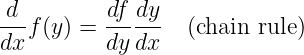

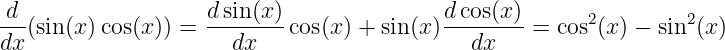

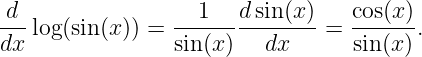

![d df (x ) dg(x)

---[f (x ) + g (x )] =-----+ ------

dx dx dx](book132x.png) | (1.33) |

| (1.34) |

| (1.35) |

The product and chain rules are used to compute the derivatives of complex functions. For instance,

and

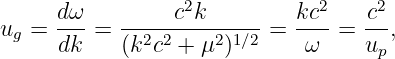

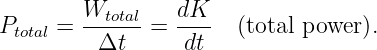

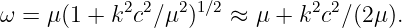

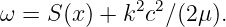

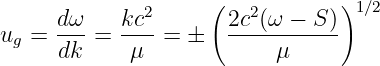

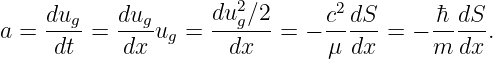

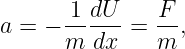

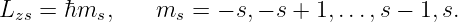

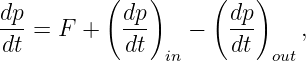

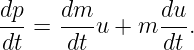

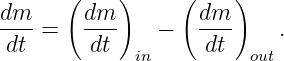

We now ask the following question: How fast do wave packets move? Surprisingly, we often find that wave packets move at a speed very different from the phase speed, c = ω∕k, of the wave composing the wave packet.

We shall find that the speed of motion of wave packets, referred to as the group velocity, is given by

| (1.36) |

The derivative of ω(k) with respect to k is first computed and then evaluated at k = k0, the central wavenumber of the wave packet of interest.

The relationship between the angular frequency and the wavenumber for a wave, ω = ω(k), depends on the type of wave being considered. Whatever this relationship turns out to be in a particular case, it is called the dispersion relation for the type of wave in question.

As an example of a group velocity calculation, suppose we want to find the velocity of deep ocean wave packets for a central wavelength of λ0 = 60 m. This corresponds to a central wavenumber of k0 = 2π∕λ0 ≈ 0.1 m-1. The phase speed of deep ocean waves is c = (g∕k)1∕2. However, since c ≡ ω∕k, we find the frequency of deep ocean waves to be ω = (gk)1∕2. The group velocity is therefore u ≡ dω∕dk = (g∕k)1∕2∕2 = c∕2. For the specified central wavenumber, we find that u ≈ (9.8 m s-2∕0.1 m-1)1∕2∕2 ≈ 5 m s-1. By contrast, the phase speed of deep ocean waves with this wavelength is c ≈ 10 m s-1.

Dispersive waves are waves in which the phase speed varies with wavenumber. It is easy to show that dispersive waves have unequal phase and group velocities, while these velocities are equal for non-dispersive waves.

____________________________

We now derive equation (1.36). It is easiest to do this for the simplest wave packets, namely those constructed out of the superposition of just two sine waves. We will proceed by adding two waves with full space and time dependence:

| (1.37) |

After algebraic and trigonometric manipulations familiar from earlier sections, we find

| (1.38) |

where as before we have k0 = (k1 + k2)∕2, ω0 = (ω1 + ω2)∕2, Δk = (k2 - k1)∕2, and Δω = (ω2 - ω1)∕2.

Again think of this as a sine wave of frequency ω0 and wavenumber k0 modulated by a cosine function. In this case the modulation pattern moves with a speed so as to keep the argument of the cosine function constant:

| (1.39) |

Differentiating this with respect to t while holding Δk and Δω constant yields

| (1.40) |

In the limit in which the deltas become very small, this reduces to the derivative

| (1.41) |

which is the desired result.

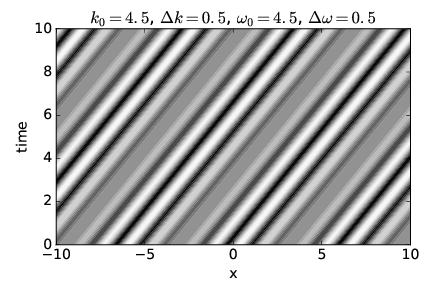

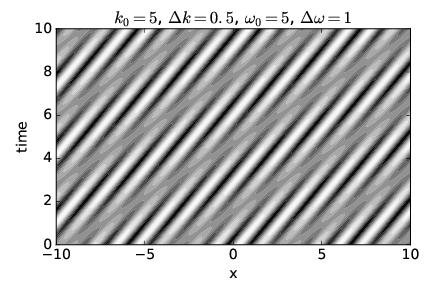

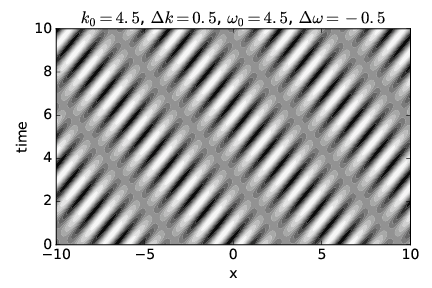

We now illustrate some examples of phase speed and group velocity by showing the displacement resulting from the superposition of two sine waves, as given by equation (1.38), in the x-t plane. This is an example of a spacetime diagram, of which we will see many examples later on.

____________________________

____________________________

The upper panel of figure 1.16 shows a non-dispersive case in which the phase speed equals the group velocity. The white and black regions indicate respectively strong wave crests and troughs (i. e., regions of large positive and negative displacements), with grays indicating a displacement near zero. Regions with large displacements indicate the location of wave packets. The positions of waves and wave packets at any given time may therefore be determined by drawing a horizontal line across the graph at the desired time and examining the variations in wave displacement along this line. The lower panel of this figure shows the wave displacement as a function of x at time t = 0 as an aid to interpretation of the upper panel.

Notice that as time increases, the crests move to the right. This corresponds to the motion of the waves within the wave packets. Note also that the wave packets, i. e., the broad regions of large positive and negative amplitudes, move to the right with increasing time as well.

Since velocity is distance moved Δx divided by elapsed time Δt, the slope of a line in figure 1.16, Δt∕Δx, is one over the velocity of whatever that line represents. The slopes of lines representing crests are the same as the slopes of lines representing wave packets in this case, which indicates that the two move at the same velocity. Since the speed of movement of wave crests is the phase speed and the speed of movement of wave packets is the group velocity, the two velocities are equal and the non-dispersive nature of this case is confirmed.

Figure 1.17 shows a dispersive wave in which the group velocity is twice the phase speed, while figure 1.18 shows a case in which the group velocity is actually opposite in sign to the phase speed. See if you can confirm that the phase and group velocities seen in each figure correspond to the values for these quantities calculated from the specified frequencies and wavenumbers.

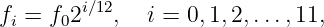

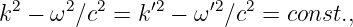

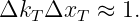

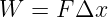

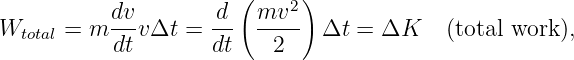

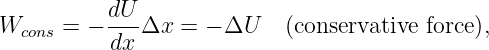

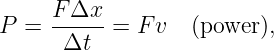

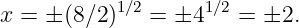

| (1.42) |

where f0 is a constant equal to the frequency of the lowest note in the scale.

| ω (s-1) | k (m-1) |

| 5 | 1 |

| 20 | 2 |

| 45 | 3 |

| 80 | 4 |

| 125 | 5 |

In this chapter we extend the ideas of the previous chapter to the case of waves in more than one dimension. The extension of the sine wave to higher dimensions is the plane wave. Wave packets in two and three dimensions arise when plane waves moving in different directions are superimposed.

Diffraction results from the disruption of a wave which is impingent upon an object. Those parts of the wave front hitting the object are scattered, modified, or destroyed. The resulting diffraction pattern comes from the subsequent interference of the various pieces of the modified wave. A knowledge of diffraction is necessary to understand the behavior and limitations of optical instruments such as telescopes.

Diffraction and interference in two and three dimensions can be manipulated to produce useful devices such as the diffraction grating.

____________________________

____________________________

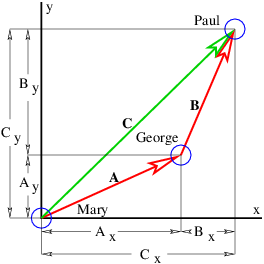

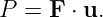

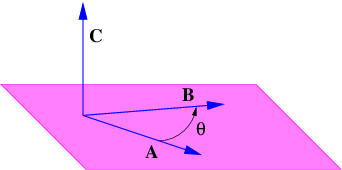

Before we can proceed further we need to explore the idea of a vector. A vector is a quantity which expresses both magnitude and direction. Graphically we represent a vector as an arrow. In typeset notation a vector is represented by a boldface character, while in handwriting an arrow is drawn over the character representing the vector.

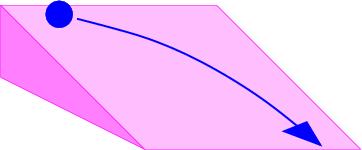

Figure 2.1 shows some examples of displacement vectors, i. e., vectors which represent the displacement of one object from another, and introduces the idea of vector addition. The tail of vector B is collocated with the head of vector A, and the vector which stretches from the tail of A to the head of B is the sum of A and B, called C in figure 2.1.

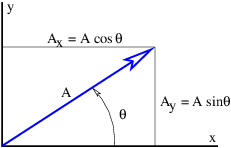

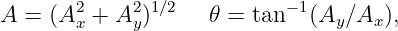

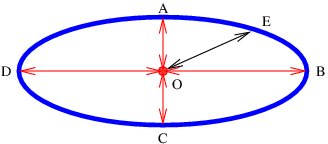

The quantities Ax, Ay, etc., represent the Cartesian components of the vectors in figure 2.1. A vector can be represented either by its Cartesian components, which are just the projections of the vector onto the Cartesian coordinate axes, or by its direction and magnitude. The direction of a vector in two dimensions is generally represented by the counterclockwise angle of the vector relative to the x axis, as shown in figure 2.2. Conversion from one form to the other is given by the equations

| (2.1) |

| (2.2) |

where A is the magnitude of the vector. A vector magnitude is sometimes represented by absolute value notation: A ≡|A|.

Notice that the inverse tangent gives a result which is ambiguous relative to adding or subtracting integer multiples of π. Thus the quadrant in which the angle lies must be resolved by independently examining the signs of Ax and Ay and choosing the appropriate value of θ.

To add two vectors, A and B, it is easiest to convert them to Cartesian component form. The components of the sum C = A + B are then just the sums of the components:

| (2.3) |

Subtraction of vectors is done similarly, e. g., if A = C - B, then

| (2.4) |

A unit vector is a vector of unit length. One can always construct a unit vector from an ordinary (non-zero) vector by dividing the vector by its length: n = A∕|A|. This division operation is carried out by dividing each of the vector components by the number in the denominator. Alternatively, if the vector is expressed in terms of length and direction, the magnitude of the vector is divided by the denominator and the direction is unchanged.

Unit vectors can be used to define a Cartesian coordinate system. Conventionally, i, j, and k indicate the x, y, and z axes of such a system. Note that i, j, and k are mutually perpendicular. Any vector can be represented in terms of unit vectors and its Cartesian components: A = Axi + Ayj + Azk. An alternate way to represent a vector is as a list of components: A = (Ax,Ay,Az). We tend to use the latter representation since it is somewhat more economical notation.

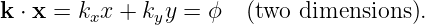

There are two ways to multiply two vectors, yielding respectively what are known as the dot product and the cross product. The cross product yields another vector while the dot product yields a number. Here we will discuss only the dot product. The cross product will be presented later when it is needed.

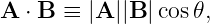

Given vectors A and B, the dot product of the two is defined as

| (2.5) |

where θ is the angle between the two vectors. In two dimensions an alternate expression for the dot product exists in terms of the Cartesian components of the vectors:

| (2.6) |

It is easy to show that this is equivalent to the cosine form of the dot product when the x axis lies along one of the vectors, as in figure 2.3. Notice in particular that Ax = |A| cos θ, while Bx = |B| and By = 0. Thus, A ⋅ B = |A| cos θ|B| in this case, which is identical to the form given in equation (2.5).

____________________________

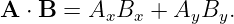

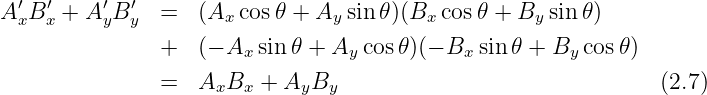

All that remains to be proven for equation (2.6) to hold in general is to show that it yields the same answer regardless of how the Cartesian coordinate system is oriented relative to the vectors. To do this, we must show that AxBx + AyBy = Ax′Bx′ + Ay′By′, where the primes indicate components in a coordinate system rotated from the original coordinate system.

Figure 2.4 shows the vector R resolved in two coordinate systems rotated with respect to each other. From this figure it is clear that X′ = a + b. Focusing on the shaded triangles, we see that a = X cos θ and b = Y sin θ. Thus, we find X′ = X cos θ + Y sin θ. Similar reasoning shows that Y ′ = -X sin θ + Y cos θ. Substituting these and using the trigonometric identity cos 2θ + sin 2θ = 1 results in

A numerical quantity that doesn’t depend on which coordinate system is being used is called a scalar. The dot product of two vectors is a scalar. However, the components of a vector, taken individually, are not scalars, since the components change as the coordinate system changes. Since the laws of physics cannot depend on the choice of coordinate system being used, we insist that physical laws be expressed in terms of scalars and vectors, but not in terms of the components of vectors.

In three dimensions the cosine form of the dot product remains the same, while the component form is

| (2.8) |

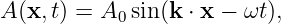

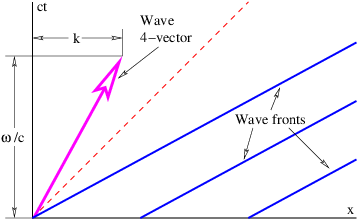

____________________________

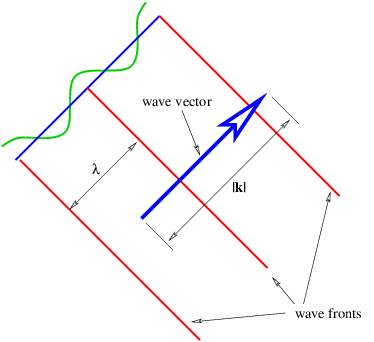

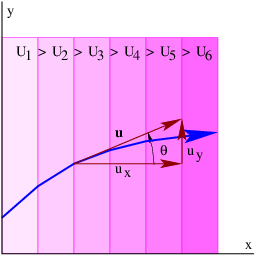

A plane wave in two or three dimensions is like a sine wave in one dimension except that crests and troughs aren’t points, but form lines (2-D) or planes (3-D) perpendicular to the direction of wave propagation. Figure 2.5 shows a plane sine wave in two dimensions. The large arrow is a vector called the wave vector, which defines (1) the direction of wave propagation by its orientation perpendicular to the wave fronts, and (2) the wavenumber by its length. We can think of a wave front as a line along the crest of the wave. The equation for the displacement associated with a plane sine wave (of unit amplitude) in three dimensions at some instant in time is

| (2.9) |

Since wave fronts are lines or surfaces of constant phase, the equation defining a wave front is simply k ⋅ x = const.

In the two dimensional case we simply set kz = 0. Therefore, a wave front, or line of constant phase ϕ in two dimensions is defined by the equation

| (2.10) |

This can be easily solved for y to obtain the slope and intercept of the wave front in two dimensions.

As for one dimensional waves, the time evolution of the wave is obtained by adding a term -ωt to the phase of the wave. In three dimensions the wave displacement as a function of both space and time is given by

| (2.11) |

The frequency depends in general on all three components of the wave vector. The form of this function, ω = ω(kx,ky,kz), which as in the one dimensional case is called the dispersion relation, contains information about the physical behavior of the wave.

____________________________

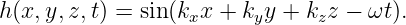

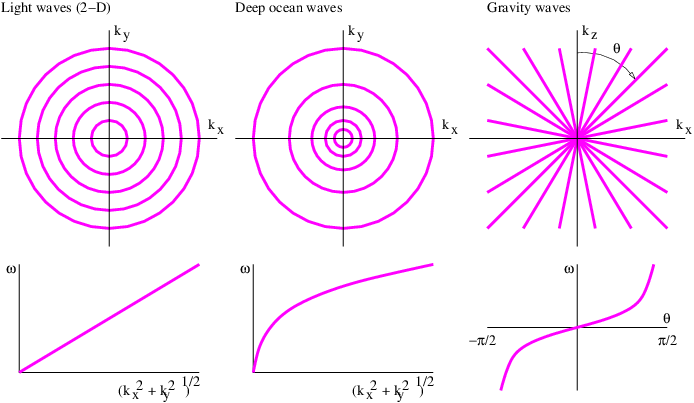

Some examples of dispersion relations for waves in two dimensions are as follows:

| (2.12) |

where c is the speed of light in a vacuum.

| (2.13) |

where g is the strength of the Earth’s gravitational field as before.

| (2.14) |

where N is a constant with the dimensions of inverse time called the Brunt-Väisälä frequency.

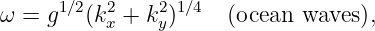

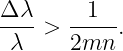

Contour plots of these dispersion relations are plotted in the upper panels of figure 2.6. These plots are to be interpreted like topographic maps, where the lines represent contours of constant elevation. In the case of figure 2.6, constant values of frequency are represented instead. For simplicity, the actual values of frequency are not labeled on the contour plots, but are represented in the graphs in the lower panels. This is possible because frequency depends only on wave vector magnitude (kx2 + k y2)1∕2 for the first two examples, and only on wave vector direction θ for the third.

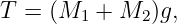

We now study wave packets in two dimensions by asking what the superposition of two plane sine waves looks like. If the two waves have different wavenumbers, but their wave vectors point in the same direction, the results are identical to those presented in the previous chapter, except that the wave packets are indefinitely elongated without change in form in the direction perpendicular to the wave vector. The wave packets produced in this case move in the direction of the wave vectors and thus appear to a stationary observer like a series of passing pulses with broad lateral extent.

Superimposing two plane waves which have the same frequency results in a stationary wave packet through which the individual wave fronts pass. This wave packet is also elongated indefinitely in some direction, but the direction of elongation depends on the dispersion relation for the waves being considered. These wave packets are in the form of steady beams, which guide the individual phase waves in some direction, but don’t themselves change with time. By superimposing multiple plane waves, all with the same frequency, one can actually produce a single stationary beam, just as one can produce an isolated pulse by superimposing multiple waves with wave vectors pointing in the same direction.

If the frequency of a wave depends on the magnitude of the wave vector, but not on its direction, the wave’s dispersion relation is called isotropic; otherwise it is anisotropic. In the isotropic case, two waves have the same frequency only if the lengths of their wave vectors, and hence their wavelengths, are the same. The first two examples in figure 2.6 satisfy this condition, while the last example is anisotropic.

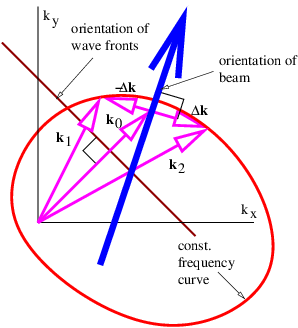

____________________________

____________________________

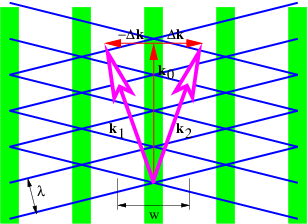

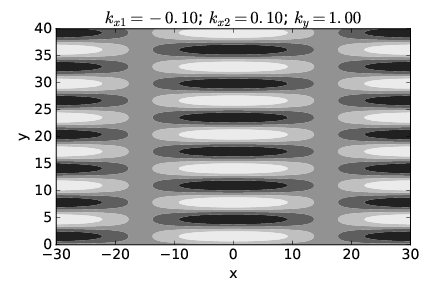

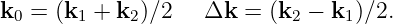

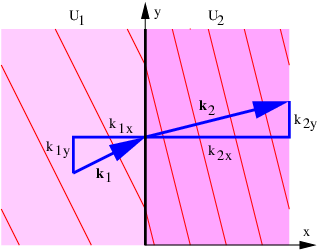

We now use the language of vectors to investigate the superposition of two plane waves with wave vectors k1 and k2:

| (2.15) |

Applying the trigonometric identity for the sine of the sum of two angles (as we have done previously), equation (2.15) can be reduced to

| (2.16) |

where

| (2.17) |

This is in the form of a sine wave moving in the k0 direction with phase speed cphase = ω∕|k0| and wavenumber |k0|, modulated in the Δk direction by a cosine function. The lines of destructive interference are normal to Δk. The distance w between lines of destructive interference is the distance between successive zeros of the cosine function in equation (2.16), implying that |Δk|w = π, which leads to

| (2.18) |

Thus, the smaller |Δk|, the greater is the beam diameter.

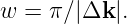

In this section we investigate the beams produced by superimposing isotropic waves of the same frequency. Figure 2.7 illustrates what happens in such a superposition. Vectors k1 and k2 of equal length give rise to a mean wave vector k0 and half the difference, Δk. As illustrated, the lines of constructive and destructive interference are perpendicular to Δk. Figure 2.8 shows a concrete example of the beams produced by superposition of two plane waves of equal wavelength oriented as in figure 2.7. The beams are aligned vertically, since Δk is horizontal, with the lines of destructive interference separating the beams located near x = ±16. The transverse width of the beams of ≈ 32 satisfies equation (2.18) with |Δk| = 0.1. Each beam is made up of vertically propagating phase waves, with the crests and troughs indicated by the regions of white and black.

In the third example of figure 2.6, the frequency of the wave depends only on the direction of the wave vector, independent of its magnitude, which is the reverse of the case for an isotropic dispersion relation. In this highly anisotropic case, different plane waves with the same frequency have wave vectors which point in the same direction, but have different lengths.

____________________________

More generally, one might have waves for which the frequency depends on both the direction and magnitude of the wave vector. In this case, two different plane waves with the same frequency would typically have wave vectors which differ both in direction and magnitude. Such an example is illustrated in figures 2.9 and 2.10.

____________________________

____________________________

Figure 2.11 summarizes what we have learned about adding plane waves with the same frequency. In general, the beam orientation (and the lines of constructive interference) are not perpendicular to the wave fronts. This only occurs when the wave frequency is independent of wave vector direction.

As with wave packets in one dimension, we can add together more than two waves to produce an isolated wave packet. We will confine our attention here to the case of an isotropic dispersion relation in which all the wave vectors for a given frequency are of the same length.

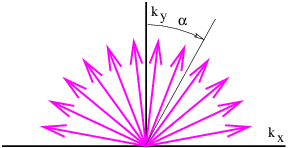

____________________________

Figure 2.12 shows an example of this in which wave vectors of the same wavelength but different directions are added together. Defining αi as the angle of the ith wave vector clockwise from the vertical, as illustrated in figure 2.12, we could write the superposition of these waves at time t = 0 as

where we have assumed that kxi = k sin(αi) and kyi = k cos(αi). The parameter k = |k| is the magnitude of the wave vector and is the same for all the waves. Let us also assume in this example that the amplitude of each wave component decreases with increasing |αi|:

![hi = exp[- (αi∕ αmax)2].](book163x.png) | (2.20) |

The exponential function decreases rapidly as its argument becomes more negative, and for practical purposes, only wave vectors with |αi|≤ αmax contribute significantly to the sum. We call αmax the spreading angle.

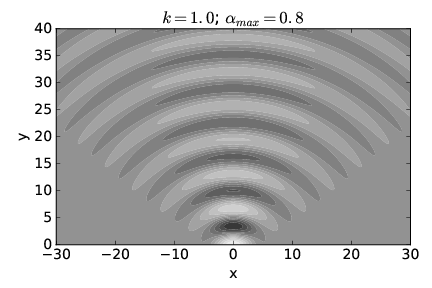

____________________________

Figure 2.13 shows what h(x,y) looks like when αmax = 0.8 radians and k = 1. Notice that for y = 0 the wave amplitude is only large for a small region in the range -4 < x < 4. However, for y > 0 the wave spreads into a broad, semicircular pattern.

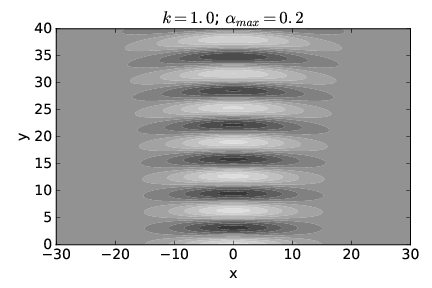

____________________________

Figure 2.14 shows the computed pattern of h(x,y) when the spreading angle αmax = 0.2 radians. The wave amplitude is large for a much broader range of x at y = 0 in this case, roughly -12 < x < 12. On the other hand, the subsequent spread of the wave is much smaller than in the case of figure 2.13.

We conclude that a superposition of plane waves with wave vectors spread narrowly about a central wave vector which points in the y direction (as in figure 2.14) produces a beam which is initially broad in x but for which the breadth increases only slightly with increasing y. However, a superposition of plane waves with wave vectors spread more broadly (as in figure 2.13) produces a beam which is initially narrow in x but which rapidly increases in width as y increases.

The relationship between the spreading angle αmax and the initial breadth of the beam is made more understandable by comparison with the results for the two-wave superposition discussed at the beginning of this section. As indicated by equation (2.18), large values of kx, and hence α, are associated with small wave packet dimensions in the x direction and vice versa. The superposition of two waves doesn’t capture the subsequent spread of the beam which occurs when many waves are superimposed, but it does lead to a rough quantitative relationship between αmax (which is just tan -1(k x∕ky) in the two wave case) and the initial breadth of the beam. If we invoke the small angle approximation for α = αmax so that αmax = tan -1(k x∕ky) ≈ kx∕ky ≈ kx∕k, then kx ≈ kαmax and equation (2.18) can be written w = π∕kx ≈ π∕(kαmax) = λ∕(2αmax). Thus, we can find the approximate spreading angle from the wavelength of the wave λ and the initial breadth of the beam w:

| (2.21) |

____________________________

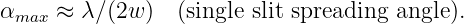

How does all of this apply to the passage of waves through a slit? Imagine a plane wave of wavelength λ impingent on a barrier with a slit. The barrier transforms the plane wave with infinite extent in the lateral direction into a beam with initial transverse dimensions equal to the width of the slit. The subsequent development of the beam is illustrated in figures 2.13 and 2.14, and schematically in figure 2.15. In particular, if the slit width is comparable to the wavelength, the beam spreads broadly as in figure 2.13. If the slit width is large compared to the wavelength, the beam doesn’t spread as much, as figure 2.14 illustrates. Equation (2.21) gives us an approximate quantitative result for the spreading angle if w is interpreted as the width of the slit.

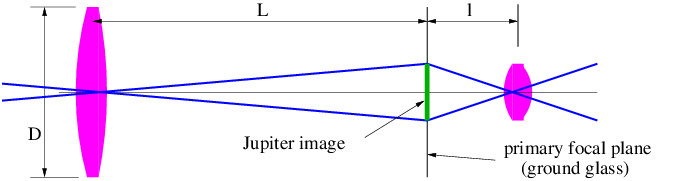

One use of the above equation is in determining the maximum angular resolution of optical instruments such as telescopes. The primary lens or mirror can be thought of as a rather large “slit”. Light from a distant point source is essentially in the form of a plane wave when it arrives at the telescope. However, the light passed by the telescope is no longer a plane wave, but is a beam with a tendency to spread. The spreading angle αmax is given by equation (2.21), and the telescope cannot resolve objects with an angular separation less than αmax. Replacing w with the diameter of the lens or mirror in equation (2.21) thus yields the telescope’s angular resolution. For instance, a moderate sized telescope with aperture 1 m observing red light with λ ≈ 6 × 10-7 m has a maximum angular resolution of about 3 × 10-7 radians.

____________________________

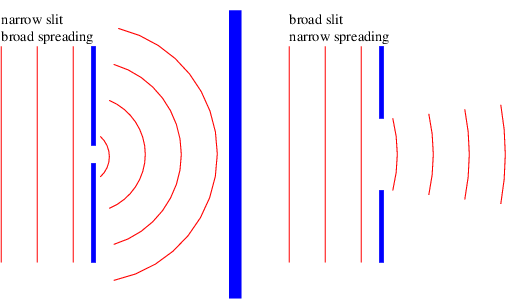

Let us now imagine a plane sine wave normally impingent on a screen with two narrow slits spaced by a distance d, as shown in figure 2.16. Since the slits are narrow relative to the wavelength of the wave impingent on them, the spreading angle of the beams is large and the diffraction pattern from each slit individually is a cylindrical wave spreading out in all directions, as illustrated in figure 2.13. The cylindrical waves from the two slits interfere, resulting in oscillations in wave intensity at the screen on the right side of figure 2.16.

Constructive interference occurs when the difference in the paths traveled by the two waves from their originating slits to the screen, L2 - L1, is an integer multiple m of the wavelength λ: L2 - L1 = mλ. If L0 ≫ d, the lines L1 and L2 are nearly parallel, which means that the narrow end of the dark triangle in figure 2.16 has an opening angle of θ. Thus, the path difference between the beams from the two slits is L2 - L1 = d sin θ. Substitution of this into the above equation shows that constructive interference occurs when

| (2.22) |

Destructive interference occurs when m is an integer plus 1∕2. The integer m is called the interference order and is the number of wavelengths by which the two paths differ.

Since the angular spacing Δθ of interference peaks in the two slit case depends on the wavelength of the incident wave, the two slit system can be used as a crude device to distinguish between the wavelengths of different components of a non-sinusoidal wave impingent on the slits. However, if more slits are added, maintaining a uniform spacing d between slits, we obtain a more sophisticated device for distinguishing beam components. This is called a diffraction grating.

____________________________

____________________________

____________________________

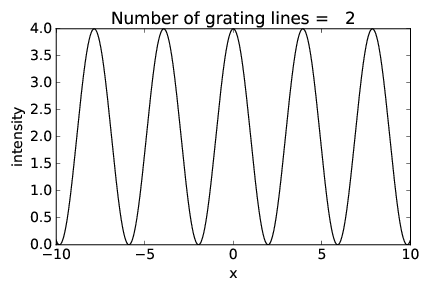

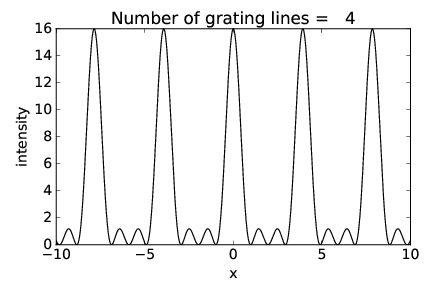

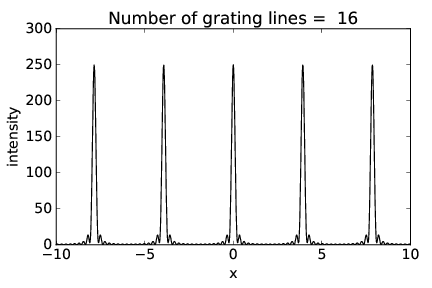

Figures 2.17-2.19 show the intensity of the diffraction pattern as a function of position x on the display screen (see figure 2.16) for gratings with 2, 4, and 16 slits respectively, with the same slit spacing. Notice how the interference peaks remain in the same place but increase in sharpness as the number of slits increases.

The width of the peaks is actually related to the overall width of the grating, w = nd, where n is the number of slits. Thinking of this width as the dimension of a large single slit, the single slit equation, αmax = λ∕(2w), tells us the angular width of the peaks.2

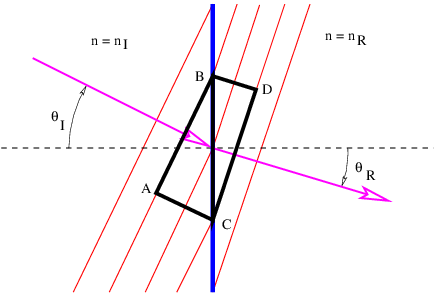

Whereas the angular width of the interference peaks is governed by the single slit equation, their angular positions are governed by the two slit equation. Let us assume for simplicity that |θ|≪ 1 so that we can make the small angle approximation to the two slit equation, mλ = d sin θ ≈ dθ, and ask the following question: How different do two wavelengths differing by Δλ have to be in order that the interference peaks from the two waves not overlap? In order for the peaks to be distinguishable, they should be separated in θ by an angle Δθ = mΔλ∕d, which is greater than the angular width of each peak, αmax:

| (2.23) |

Substituting in the above expressions for Δθ and αmax and solving for Δλ, we get Δλ > λ∕(2mn), where λ is the average of the two wavelengths and n = w∕d is the number of slits in the diffraction grating. Thus, the fractional difference between wavelengths which can be distinguished by a diffraction grating depends solely on the interference order m and the number of slits n in the grating:

| (2.24) |

_____________________________________

As was shown previously, when a plane wave is impingent on an aperture which has dimensions much greater than the wavelength of the wave, diffraction effects are minimal and a segment of the plane wave passes through the aperture essentially unaltered. This plane wave segment can be thought of as a wave packet, called a beam or ray, consisting of a superposition of wave vectors very close in direction and magnitude to the central wave vector of the wave packet. In most cases the ray simply moves in the direction defined by the central wave vector, i. e., normal to the orientation of the wave fronts. However, this is not true when the medium through which the light propagates is optically anisotropic, i. e., light traveling in different directions moves at different phase speeds. An example of such a medium is a calcite crystal. In the anisotropic case, the orientation of the ray can be determined once the dispersion relation for the waves in question is known, by using the techniques developed in the previous chapter.

If light moves through some apparatus in which all apertures are much greater in dimension than the wavelength of light, then we can use the above rule to follow rays of light through the apparatus. This is called the geometrical optics approximation.

Most of what we need to know about geometrical optics can be summarized in two rules, the laws of reflection and refraction. These rules may both be inferred by considering what happens when a plane wave segment impinges on a flat surface. If the surface is polished metal, the wave is reflected, whereas if the surface is an interface between two transparent media with differing indices of refraction, the wave is partially reflected and partially refracted. Reflection means that the wave is turned back into the half-space from which it came, while refraction means that it passes through the interface, acquiring a different direction of motion from that which it had before reaching the interface.

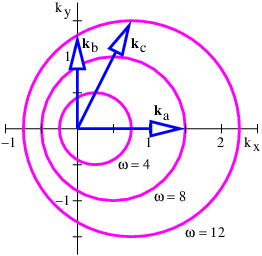

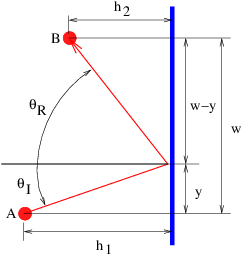

____________________________

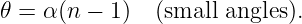

Figure 3.1 shows the wave vector and wave front of a wave being reflected from a plane mirror. The angles of incidence, θI, and reflection, θR, are defined to be the angles between the incoming and outgoing wave vectors respectively and the line normal to the mirror. The law of reflection states that θR = θI. This is a consequence of the need for the incoming and outgoing wave fronts to be in phase with each other all along the mirror surface. This plus the equality of the incoming and outgoing wavelengths is sufficient to insure the above result.

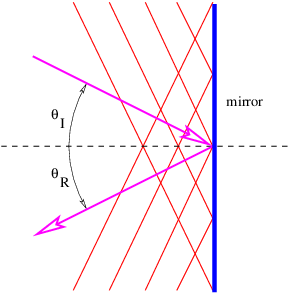

____________________________

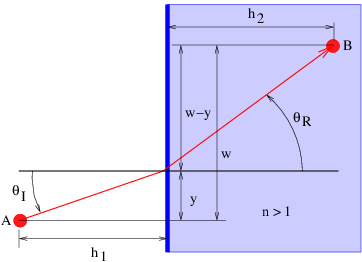

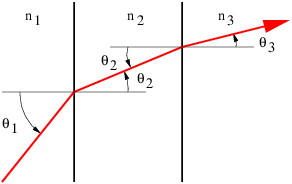

Refraction, as illustrated in figure 3.2, is slightly more complicated. Since nR > nI, the speed of light in the right-hand medium is less than in the left-hand medium. (Recall that the speed of light in a medium with refractive index n is cmedium = cvac∕n.) The frequency of the wave packet doesn’t change as it passes through the interface, so the wavelength of the light on the right side is less than the wavelength on the left side.

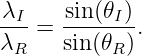

Let us examine the triangle ABC in figure 3.2. The side AC is equal to the side BC times sin(θI). However, AC is also equal to 2λI, or twice the wavelength of the wave to the left of the interface. Similar reasoning shows that 2λR, twice the wavelength to the right of the interface, equals BC times sin(θR). Since the interval BC is common to triangles ABC and DBC, we easily see that

| (3.1) |

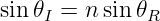

Since λI = cIT = cvacT∕nI and λR = cRT = cvacT∕nR where cI and cR are the wave speeds to the left and right of the interface, cvac is the speed of light in a vacuum, and T is the (common) period, we can easily recast the above equation in the form

| (3.2) |

This is called Snell’s law, and it governs how a ray of light bends as it passes through a discontinuity in the index of refraction. The angle θI is called the incident angle and θR is called the refracted angle. Notice that these angles are measured from the normal to the surface, not the tangent.

When light passes from a medium of lesser index of refraction to one with greater index of refraction, Snell’s law indicates that the ray bends toward the normal to the interface. The reverse occurs when the passage is in the other direction. In this latter circumstance a special situation arises when Snell’s law predicts a value for the sine of the refracted angle greater than one. This is physically untenable. What actually happens is that the incident wave is reflected from the interface. This phenomenon is called total internal reflection. The minimum incident angle for which total internal reflection occurs is obtained by substituting θR = π∕2 into equation (3.2), resulting in

| (3.3) |

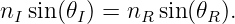

Notice that Snell’s law makes the implicit assumption that rays of light move in the direction of the light’s wave vector, i. e., normal to the wave fronts. As the analysis in the previous chapter makes clear, this is valid only when the optical medium is isotropic, i. e., the wave frequency depends only on the magnitude of the wave vector, not on its direction.

Certain kinds of crystals, such as those made of calcite, are not isotropic — the speed of light in such crystals, and hence the wave frequency, depends on the orientation of the wave vector. As an example, the angular frequency in an anisotropic medium might take the form

![[c2(kx + ky)2 c2(kx - ky)2]1∕2

ω = -1----------+ -2---------- ,

2 2](book171x.png) | (3.4) |

where c1 is the speed of light for waves in which ky = kx, and c2 is its speed when ky = -kx.

____________________________

Figure 3.3 shows an example in which a ray hits a calcite crystal oriented so that constant frequency contours are as specified in equation (3.4). The wave vector is oriented normal to the surface of the crystal, so that wave fronts are parallel to this surface. Upon entering the crystal, the wave front orientation must stay the same to preserve phase continuity at the surface. However, due to the anisotropy of the dispersion relation for light in the crystal, the ray direction changes as shown in the right panel. This behavior is clearly inconsistent with the usual version of Snell’s law!

It is possible to extend Snell’s law to the anisotropic case. However, we will not present this here. The following discussions of optical instruments will always assume that isotropic optical media are used.

Given the laws of reflection and refraction, one can see in principle how the passage of light through an optical instrument could be traced. For each of a number of initial rays, the change in the direction of the ray at each mirror surface or refractive index interface can be calculated. Between these points, the ray traces out a straight line.

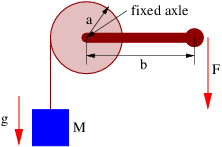

Though simple in conception, this procedure can be quite complex in practice. However, the procedure simplifies if a number of approximations, collectively called the thin lens approximation, are valid. We begin with the calculation of the bending of a ray of light as it passes through a prism, as illustrated in figure 3.4.

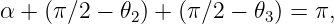

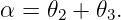

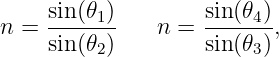

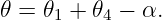

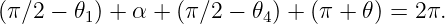

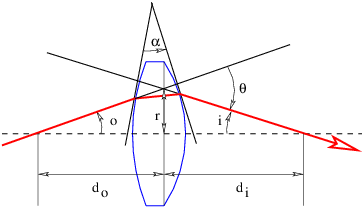

The pieces of information needed to find θ, the angle through which the ray is deflected, are as follows: the geometry of the triangle defined by the entry and exit points of the ray and the upper vertex of the prism leads to

| (3.5) |

which simplifies to

| (3.6) |

Snell’s law at the entrance and exit points of the ray tell us that

| (3.7) |

where n is the index of refraction of the prism. (The index of refraction of the surroundings is assumed to be unity.) One can also infer that

| (3.8) |

This comes from the fact that the the sum of the internal angles of the shaded quadrangle in figure 3.4 is

| (3.9) |

Combining equations (3.6), (3.7), and (3.8) allows the ray deflection θ to be determined in terms of θ1 and α, but the resulting expression is very messy. However, great simplification occurs if the following conditions are met:

With these approximations it is easy to show that

| (3.10) |

____________________________

Generally speaking, lenses and mirrors in optical instruments have curved rather than flat surfaces. However, we can still use the laws for reflection and refraction by plane surfaces as long as the segment of the surface on which the wave packet impinges is not curved very much on the scale of the wave packet dimensions. This condition is easy to satisfy with light impinging on ordinary optical instruments. In this case, the deflection of a ray of light is given by equation (3.10) if α is defined as the intersection of the tangent lines to the entry and exit points of the ray, as illustrated in figure 3.5.

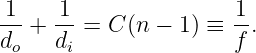

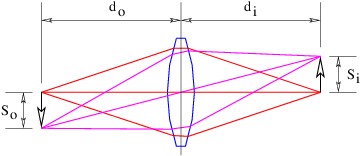

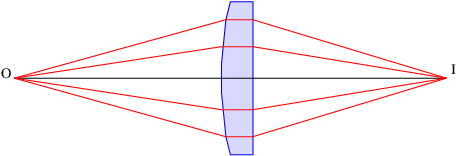

A positive lens is thicker in the center than at the edges. The angle α between the tangent lines to the two surfaces of the lens at a distance r from the central axis takes the form α = Cr, where C is a constant. The deflection angle of a beam hitting the lens a distance r from the center is therefore θ = Cr(n - 1), as indicated in figure 3.5. The angles o and i sum to the deflection angle: o + i = θ = Cr(n - 1). However, to the extent that the small angle approximation holds, o = r∕do and i = r∕di where do is the distance to the object and di is the distance to the image of the object. Putting these equations together and cancelling the r results in the thin lens equation:

| (3.11) |

The quantity f is called the focal length of the lens. Notice that f = di if the object is very far from the lens, i. e., if do is extremely large.

____________________________

Figure 3.6 shows how a positive lens makes an image. The image is produced by all of the light from each point on the object falling on a corresponding point in the image. If the arrow on the left is an illuminated object, an image of the arrow will appear at right if the light coming from the lens is allowed to fall on a piece of paper or a ground glass screen. The size of the object So and the size of the image Si are related by simple geometry to the distances of the object and the image from the lens:

| (3.12) |

Notice that a positive lens inverts the image.

An image will be produced to the right of the lens only if do > f. If do < f, the lens is unable to converge the rays from the image to a point, as is seen in figure 3.7. However, in this case the backward extension of the rays converge at a point called a virtual image, which in the case of a positive lens is always farther away from the lens than the object. The image is called virtual because it does not appear on a ground glass screen placed at this point. Unlike the real image seen in figure 3.6, the virtual image is not inverted. The thin lens equation still applies if the distance from the lens to the image is taken to be negative.

A negative lens is thinner in the center than at the edges and produces only virtual images. As seen in figure 3.8, the virtual image produced by a negative lens is closer to the lens than is the object. Again, the thin lens equation is still valid, but both the distance from the image to the lens and the focal length must be taken as negative. Only the distance to the object remains positive.

Curved mirrors also produce images in a manner similar to a lens, as shown in figure 3.9. A concave mirror, as seen in this figure, works in analogy to a positive lens, producing a real or a virtual image depending on whether the object is farther from or closer to the mirror than the mirror’s focal length. A convex mirror acts like a negative lens, always producing a virtual image. The thin lens equation works in both cases as long as the angles are small.

An alternate approach to geometrical optics can be developed from Fermat’s principle. This principle states (in its simplest form) that light waves of a given frequency traverse the path between two points which takes the least time. The most obvious example of this is the passage of light through a homogeneous medium in which the speed of light doesn’t change with position. In this case the shortest time corresponds to the shortest distance between the points, which, as we all know, is a straight line. Thus, Fermat’s principle is consistent with light traveling in a straight line in a homogeneous medium.

____________________________

Fermat’s principle can also be used to derive the laws of reflection and refraction. For instance, figure 3.10 shows a candidate ray for reflection in which the angles of incidence and reflection are not equal. The time required for the light to go from point A to point B is

![t = ([h2 + y2]1∕2 + [h2+ (w - y)2]1∕2)∕c

1 2](book180x.png) | (3.13) |

where c is the speed of light. We find the minimum time by differentiating t with respect to y and setting the result to zero, with the result that

![-----y----- = ------w---y------ .

[h21 + y2]1∕2 [h22 + (w - y)2]1∕2](book181x.png) | (3.14) |

However, we note that the left side of this equation is simply sin θI, while the right side is sin θR, so that the minimum time condition reduces to sin θI = sin θR or θI = θR, which is the law of reflection.

____________________________

A similar analysis may be done to derive Snell’s law of refraction. The speed of light in a medium with refractive index n is c∕n, where c is its speed in a vacuum. Thus, the time required for light to go some distance in such a medium is n times the time light takes to go the same distance in a vacuum. Referring to figure 3.11, the time required for light to go from A to B becomes

![2 2 1∕2 2 2 1∕2

t = ([h 1 + y ] + n [h2 + (w - y )] )∕c.](book182x.png) | (3.15) |

This results in the condition

| (3.16) |

where θR is now the refracted angle. We recognize this result as Snell’s law.

Notice that the reflection case illustrates a point about Fermat’s principle: The minimum time may actually be a local rather than a global minimum — after all, in figure 3.10, the global minimum distance from A to B is still just a straight line between the two points! In fact, light starting from point A will reach point B by both routes — the direct route and the reflected route.

____________________________

It turns out that trajectories allowed by Fermat’s principle don’t strictly have to be minimum time trajectories. They can also be maximum time trajectories, as illustrated in figure 3.12. In this case light emitted at point O can be reflected back to point O from four points on the mirror, A, B, C, and D. The trajectories O-A-O and O-C-O are minimum time trajectories while O-B-O and O-D-O are maximum time trajectories.

Fermat’s principle seems rather mysterious. However, the American physicist Richard Feynman made sense out of it by invoking an even more fundamental principle, as we now see.

If a light ray originates at point O in figure 3.12, reflects off of the ellipsoidal mirror surface at point A, and returns to point O, the elapsed time isn’t much different from that experienced by a ray which reflects off the mirror a slight distance from point A and returns to O. This is because at point A the beam from point O is perpendicular to the tangent to the surface of the mirror at point A. In contrast, the time experienced by a ray going from point O to point E and back would differ by a much greater amount than the time experienced by a ray reflecting off the mirror a slight distance from point E. This is because the tangent to the mirror surface at point E is not perpendicular to the beam from point O.

Technically, the change in the round trip time varies linearly with the deviation in the reflection point from point E, but quadratically with the deviation from point A. If this deviation is small in the first place, then the change in the round trip time will be much smaller for the quadratic case than for the linear case.

It seems odd that we would speak of a beam reflecting back to point O if it hit the mirror at any point except A, B, C, or D, due to the requirements of the law of reflection. However, recall that the law of reflection itself depends on Fermat’s principle, so we cannot assume the validity of that law in this investigation.

Feynman postulated that light rays explore all possible paths from one point to another, but that the only paths realized in nature are those for which light taking closely neighboring paths experiences nearly the same elapsed time (or more generally, traverses nearly the same number of wavelengths) as the original path. If this is true, then neighboring rays interfere constructively with each other, resulting in a much brighter beam than would occur in the absence of this constructive interference. Thus, the round-trip paths O-A-O, O-B-O, O-C-O, and O-D-O in figure 3.12 actually occur, but not O-E-O. Feynman explains Fermat’s principle by invoking constructive and destructive interference!

____________________________

Figure 3.13 illustrates a rather peculiar situation. Notice that all the rays from point O which intercept the lens end up at point I. This would seem to contradict Fermat’s principle, in that only the minimum (or maximum) time trajectories should occur. However, a calculation shows that all the illustrated trajectories in this particular case take the same time. Thus, the light cannot choose one trajectory over another using Fermat’s principle and all of the trajectories are equally favored. Note that this inference applies not to just any set of trajectories, but only those going from an object point to the corresponding image point.

_____________________________________

_____________________________________

Albert Einstein invented the special and general theories of relativity early in the 20th century, though many other people contributed to the intellectual climate which made these discoveries possible. The special theory of relativity arose out of a conflict between the ideas of mechanics as developed by Galileo and Newton, and the theory of electromagnetism. For this reason relativity is often discussed in textbooks after electromagnetism is developed. However, special relativity is actually a valid extension to the Galilean world view which is needed when objects move at very high speeds, and it is only coincidentally related to electromagnetism. For this reason we discuss relativity before electromagnetism.

The only fact from electromagnetism that we need is introduced now: There is a maximum speed at which objects can travel. This is coincidentally equal to the speed of light in a vacuum, c = 3 × 108 m s-1. Furthermore, a measurement of the speed of a particular light beam yields the same answer regardless of the speed of the light source or the speed at which the measuring instrument is moving.

This rather bizarre experimental result is in contrast to what occurs in Galilean relativity. If two cars pass a pedestrian standing on a curb, one at 20 m s-1 and the other at 50 m s-1, the faster car appears to be moving at 30 m s-1 relative to the slower car. However, if a light beam moving at 3 × 108 m s-1 passes an interstellar spaceship moving at 2 × 108 m s-1, then the light beam appears to occupants of the spaceship to be moving at 3 × 108 m s-1, not 1 × 108 m s-1. Furthermore, if the spaceship beams a light signal forward to its (stationary) destination planet, then the resulting beam appears to be moving at 3 × 108 m s-1 to instruments at the destination, not 5 × 108 m s-1.

The fact that we are talking about light beams is only for convenience. Any other means of sending a signal at the maximum allowed speed would result in the same behavior. We therefore cannot seek the answer to this apparent paradox in the special properties of light. Instead we have to look to the basic nature of space and time.

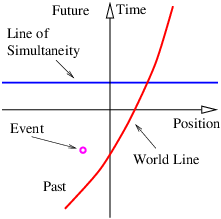

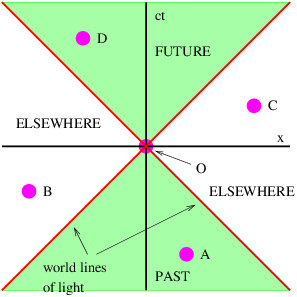

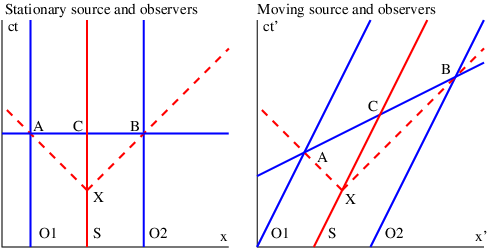

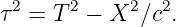

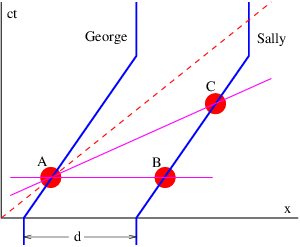

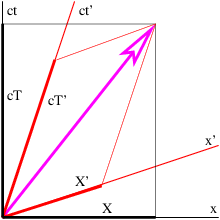

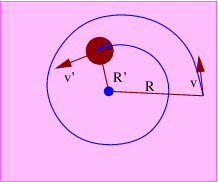

____________________________

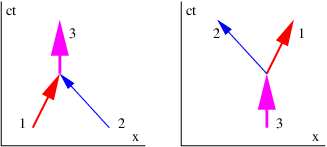

In order to gain an understanding of both Galilean and Einsteinian relativity it is important to begin thinking of space and time as being different dimensions of a four-dimensional space called spacetime. Actually, since we can’t visualize four dimensions very well, it is easiest to start with only one space dimension and the time dimension. Figure 4.1 shows a graph with time plotted on the vertical axis and the one space dimension plotted on the horizontal axis. An event is something that occurs at a particular time and a particular point in space. (“Julius X. wrecks his car in Lemitar, NM on 21 June at 6:17 PM.”) A world line is a plot of the position of some object as a function of time on a spacetime diagram, although it is conventional to put time on the vertical axis. Thus, a world line is really a line in spacetime, while an event is a point in spacetime. A horizontal line parallel to the position axis is a line of simultaneity in Galilean relativity — i. e., all events on this line occur simultaneously.

In a spacetime diagram the slope of a world line has a special meaning. Notice that a vertical world line means that the object it represents does not move — the velocity is zero. If the object moves to the right, then the world line tilts to the right, and the faster it moves, the more the world line tilts. Quantitatively, we say that

| (4.1) |

in Galilean relativity. Notice that this works for negative slopes and velocities as well as positive ones. If the object changes its velocity with time, then the world line is curved, and the instantaneous velocity at any time is the inverse of the slope of the tangent to the world line at that time.

The hardest thing to realize about spacetime diagrams is that they represent the past, present, and future all in one diagram. Thus, spacetime diagrams don’t change with time — the evolution of physical systems is represented by looking at successive horizontal slices in the diagram at successive times. Spacetime diagrams represent evolution, but they don’t evolve themselves.

The principle of relativity states that the laws of physics are the same in all inertial reference frames. An inertial reference frame is one that is not accelerated. Reference frames attached to a car at rest and to a car moving steadily down the freeway at 30 m s-1 are both inertial. A reference frame attached to a car accelerating away from a stop light is not inertial.

The principle of relativity is an educated guess or hypothesis based on extensive experience. If the principle of relativity weren’t true, we would have to do all our calculations in some preferred reference frame. This would be very annoying. However, the more fundamental problem is that we have no idea what the velocity of this preferred frame might be. Does it move with the earth? That would be very earth-centric. How about the velocity of the center of our galaxy or the mean velocity of all the galaxies? Rather than face the issue of a preferred reference frame, physicists have chosen to stick with the principle of relativity.

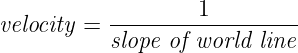

____________________________

If an object is moving to the left at velocity v relative to a particular reference frame, it appears to be moving at a velocity v′ = v - U relative to another reference frame which itself is moving at velocity U. This is the Galilean velocity transformation law, and it is based on everyday experience. If you are traveling 30 m s-1 down the freeway and another car passes you doing 40 m s-1, then the other car moves past you at 10 m s-1 relative to your car.

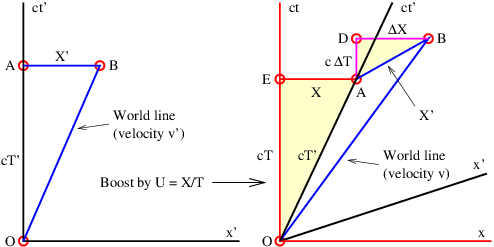

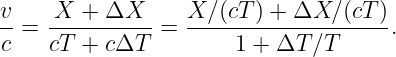

Figure 4.2 shows how the world line of an object is represented differently in the unprimed (x, t) and primed (x′, t′) reference frames. The difference between the velocity of the object and the velocity of the primed frame (i. e., the difference in the inverses of the slopes of the corresponding world lines) is the same in both reference frames in this Galilean case. This illustrates the difference between a physical law independent of reference frame (the difference between velocities in Galilean relativity) and the different motion of the object in the two different reference frames.

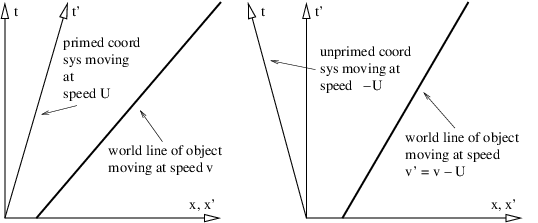

In special relativity we find that space and time “mix” in a way that they don’t in Galilean relativity. This suggests that space and time are different aspects of the same “thing”, which we call spacetime.

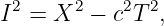

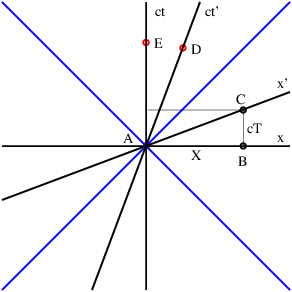

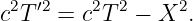

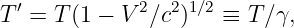

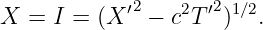

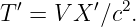

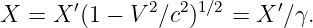

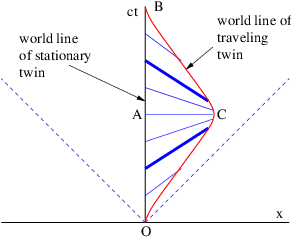

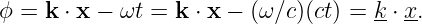

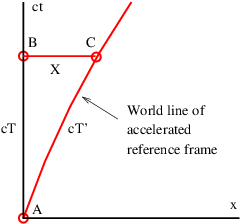

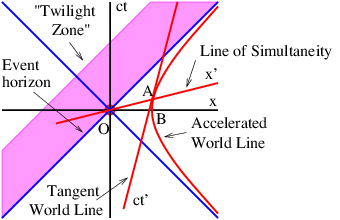

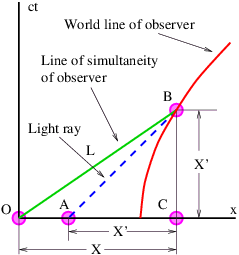

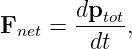

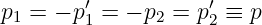

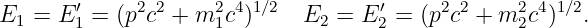

If time and position are simply different dimensions of the same abstract space, then they should have the same units. The easiest way to arrange this is to multiply time by the maximum speed, c, resulting in the kind of spacetime diagram shown in figure 4.3. Notice that world lines of light have slope ±1 when the time axis is scaled this way. Furthermore, the relationship between speed and the slope of a world line must be revised to read

| (4.2) |

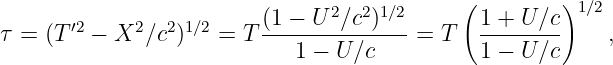

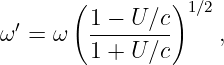

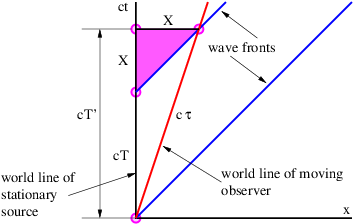

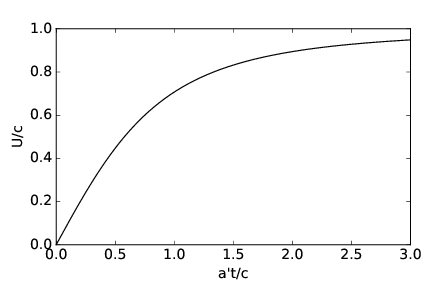

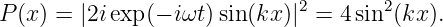

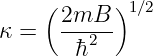

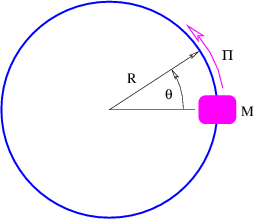

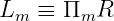

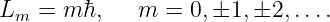

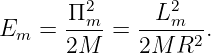

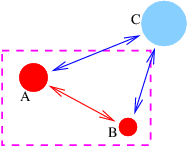

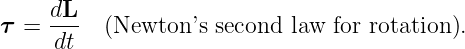

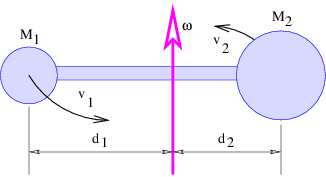

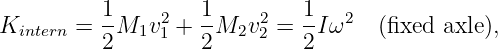

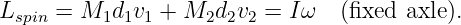

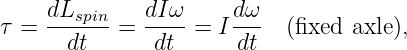

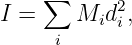

____________________________